Innovation’s Ethical Frontier: The Responsibility of Design in an Accelerating World

As humanity arrives at the threshold of massive technological leaps, whether in artificial intelligence, quantum computing, or energy, the question isn’t just how we innovate, but why and at what cost. I recently read a book by Batya Friedman, founder of the Value Sensitive Design Lab, in which she poses a question central to this dialogue: Who is ethically responsible for guiding the use of a new discovery or innovation?

It’s a question that touches every corner of the innovation ecosystem, from technologists and product managers to governments and global organizations. And it has never been more relevant than now, as we grapple with the transformative power of AI, quantum computing, and renewable energy breakthroughs.

In this article, we’ll explore these questions: Can ethics coexist with progress, or does responsible innovation inherently require trade-offs? If so, does the market value ethics enough to make those trade-offs? How do we prevent bad actors from exploiting these tools while incentivizing good actors to maintain high ethical standards? And what role should product leaders play in navigating these challenges?

The Ethical Dilemma of Innovation: Who’s Accountable?

Technological progress doesn’t happen in a vacuum. Every new discovery opens a Pandora’s box of possibilities, both positive and negative. Take cookies: not the delicious chocolatey kind, but the now-ubiquitous feature of web browsing. They were originally created as a simple way for websites to remember state between page loads, such as the items in a shopping cart, so that users had a consistent experience across pages. Over time, however, this innocuous innovation evolved into the backbone of behavioral tracking and targeted advertising, reshaping the entire digital economy.
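To make the mechanism concrete, here is a minimal sketch using Python’s standard-library `http.cookies` module. A server sends a `Set-Cookie` header, the browser stores it and returns it in a `Cookie` header on later requests, and the server reads it back. The helper functions and the cookie names and values (`cart_id`, `visitor_id`) are hypothetical illustrations; the point is that adding a long `Max-Age` turns the same state-keeping mechanism into a persistent identifier that recognizes the same browser for a year.

```python
from http.cookies import SimpleCookie
from typing import Optional


def set_cookie_header(name: str, value: str, max_age: Optional[int] = None) -> str:
    """Build the Set-Cookie header a server attaches to its response."""
    cookie = SimpleCookie()
    cookie[name] = value
    cookie[name]["path"] = "/"  # ask the browser to send it back for every page on the site
    if max_age is not None:
        # Max-Age (in seconds) makes the cookie persist across browser sessions.
        cookie[name]["max-age"] = max_age
    return cookie.output()


def read_cookie(cookie_header: str, name: str) -> Optional[str]:
    """Parse the Cookie header a browser sends on later requests."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get(name)
    return morsel.value if morsel is not None else None


# Original purpose: remember a shopping cart between page loads (hypothetical values).
print(set_cookie_header("cart_id", "c-42"))
print(read_cookie("cart_id=c-42", "cart_id"))

# Same mechanism, new purpose: a year-long identifier for the same browser.
print(set_cookie_header("visitor_id", "v-9f3", max_age=365 * 24 * 60 * 60))
```

Nothing in the code distinguishes a shopping cart from a tracking ID; the difference lies entirely in how the stored value is used.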

The transformation of cookies is a microcosm of what happens when tools designed for one purpose evolve in ways their creators didn’t foresee. This is a cautionary tale for product leaders: today’s design decisions can have far-reaching and unintended consequences. The question is, how can we innovate while anticipating and minimizing these risks?

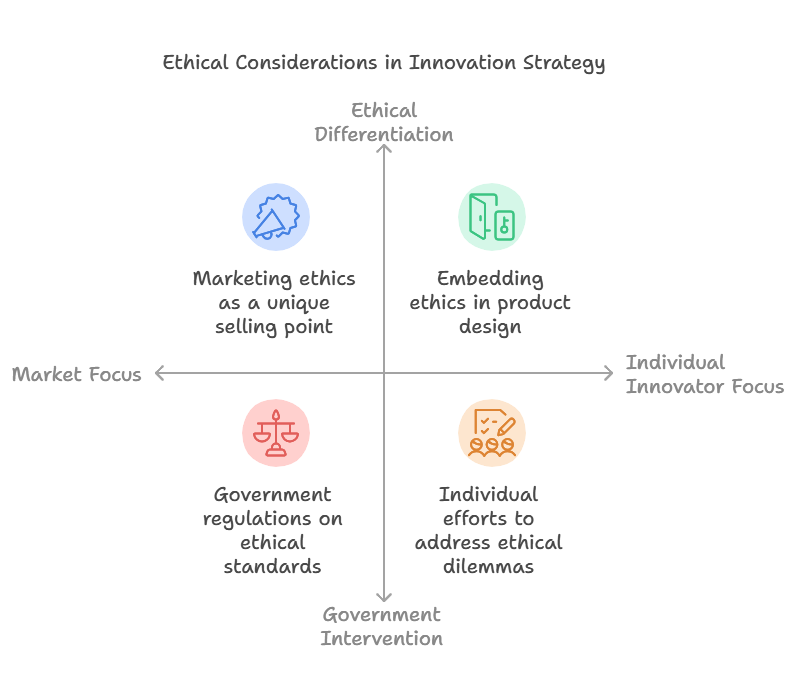

This accountability question is complex. Should the responsibility fall on:

- The Market? Should ethical considerations become a product differentiator, appealing to customers and investors who prioritize responsible innovation?

- Governments? Are ethical dilemmas a “commons problem” that requires intervention because no single actor has sufficient incentive to solve it alone?

- Individual Innovators? How can product leaders, architects, and engineers embed ethics into the DNA of their products, even in a hyper-competitive landscape?

The reality is likely a mix of all three. Yet history shows that, when left unchecked, competitive pressures often prioritize speed and scale over responsibility. The nuclear arms race is a stark example: driven by the logic of “if we don’t, they will,” nations pushed forward with development until the threat of mutually assured destruction forced cooperation.

Can we avoid a similar trajectory in the AI and quantum era?

Ethics in AI: Lessons from Anthropic and OpenAI

AI offers a contemporary example of how ethical ambitions can be challenged by market realities. Anthropic and OpenAI were founded with a strong emphasis on AI safety, aiming to build systems aligned with human values. But as they evolved, even these organizations faced criticism for prioritizing commercial objectives to secure funding and remain competitive.

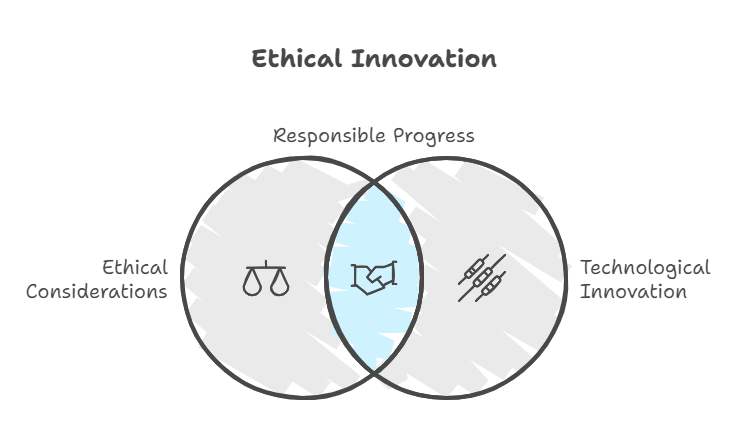

Does this mean there’s no market for ethical AI? Not necessarily. Instead, it reveals a critical tension: markets reward utility, not necessarily safety, and it is only where the two intersect that we can have both.

Consider these dynamics:

- Businesses want AI solutions that deliver immediate, measurable results—streamlined operations, reduced costs, and enhanced customer experiences.

- Investors demand scalable products that drive revenue quickly, often at the expense of longer-term safety features.

This creates a significant challenge for innovators: how do we incentivize ethical and safe AI when the market doesn’t naturally prioritize it?

Hardware-Based Constraints: A Practical Solution

One potential path forward lies in hardware-based constraints. Engineers and architects could design systems where ethical limits are physically baked in:

- GPUs with Built-In Limits: Hardware could enforce safety protocols, such as limiting the computational power available for certain types of high-risk tasks unless specific ethical criteria are met.

- Mathematical Proofs in Hardware: Devices could use tamper-resistant cryptographic methods to verify that AI systems adhere to predefined constraints, such as prohibiting malicious or harmful outputs.

- Auditable Chips: Hardware could include built-in auditing features, allowing regulators or independent third parties to verify that systems are operating within ethical boundaries.

Some of these ideas aren’t purely theoretical. Nvidia’s H100 GPUs, for example, already support confidential computing, which uses hardware-based attestation to let a remote party verify the environment a workload is running in. For product leaders, this presents an opportunity to collaborate with hardware engineers and ensure ethical considerations are part of their product’s foundation.
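As a rough illustration of the auditable-chip idea, the sketch below implements a hash-chained, append-only log in Python: each entry stores a SHA-256 hash of its event combined with the previous entry’s hash, so altering any past record breaks verification. This is a software analogy under stated assumptions, not how any particular chip works; the class and the event fields (`job`, `gpu_hours`, `policy`) are hypothetical. On real hardware, the latest hash would also need to be signed or stored somewhere an attacker cannot rewrite, such as a key held inside the chip, which this sketch does not model.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only log in which every entry commits to the one before it."""
    entries: List[dict] = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Canonical JSON so the same event always hashes the same way.
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute the chain from the start; any edited event or link fails.
        # Assumption: the final hash is anchored elsewhere (e.g. signed in
        # hardware); otherwise someone could recompute every hash and forge it.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"job": "train-7", "gpu_hours": 120, "policy": "approved"})
log.append({"job": "infer-3", "gpu_hours": 2, "policy": "approved"})
print(log.verify())  # True

# Quietly rewriting history breaks the chain.
log.entries[0]["event"]["gpu_hours"] = 12
print(log.verify())  # False
```

The design choice worth noting is that verification needs no trust in whoever stores the log, only in the anchored final hash, which is what would let regulators or independent third parties check it.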

Unintended Consequences: Anticipate the Unknown

Every major innovation brings unintended consequences, often outstripping the initial vision of its creators. Cookies transformed from a simple state-keeping tool into the foundation of invasive tracking systems, fundamentally altering privacy norms in the digital age.

What does this teach us? Anticipating unintended consequences is as critical as designing for intended use cases. For AI, quantum computing, and other emerging technologies, this means thinking holistically:

- What secondary applications might emerge from this technology?

- How could bad actors exploit it in ways we don’t currently anticipate?

- Are there design safeguards we can implement now to mitigate these risks?

Product leaders must embrace the mindset of scenario planning and phased testing: imagining not just the best-case scenarios but also the worst, and testing both in controlled, staged releases to the market. By embedding flexibility and constraints into systems today, we can help ensure technologies evolve in ways that align with societal values.

The Arms Race Problem: Competing Incentives in a Global Landscape

As discussed previously, competitive pressures amplify the ethical challenges of innovation. Companies often find themselves in an arms-race mentality: “If we don’t do it, someone else will.” Governments, too, are locked in a similar dynamic, particularly in areas like AI and quantum computing.

This mindset is dangerous. It prioritizes survival over long-term stability, leading to unchecked progress with potentially catastrophic consequences. To counter this, we might draw lessons from history:

- Mutually Assured Destruction (MAD): During the nuclear arms race, the threat of annihilation eventually brought nations to the negotiating table. Survival, not altruism or market forces, drove cooperation.

- The Apollo Effect: The moon landing gave humanity a rare glimpse of its shared fragility, fostering collaboration and inspiring global goodwill. Could similarly unifying achievements, like tackling climate change or creating renewable energy solutions, reframe the innovation narrative today and give humanity a much-needed 'firmware' upgrade?

The question for product leaders is: Can we design incentives that align ethical innovation with survival, progress, and economic growth?

Looking Ahead: The Role of Product Leaders in Shaping the Future

The pace of technological change is accelerating. Breakthroughs in quantum computing, AI, and energy promise transformative possibilities, but they also raise critical ethical questions.

The Stakes of Quantum-Powered AI

Quantum computing could dramatically amplify AI’s capabilities, potentially enabling:

- Medical Breakthroughs: AI models trained on quantum systems could unlock cures for complex diseases.

- Economic Efficiency: Financial systems could predict and respond to market shifts in real time.

- Planetary Innovation: Far more detailed global climate modeling, and perhaps eventually ambitions like terraforming, could become realities.

But with these advances come risks. How do we ensure these tools are used responsibly? How do we prevent bad actors from exploiting them for harm?

Designing for Trust and Transparency

Product leaders must embed trust and transparency into their designs. For example:

- Ethics in AI Assistants: AI tools should prioritize user control and offer transparency, such as explainability and auditing features that let users see what data was used and how decisions were made.

- Quantum Systems for Good: As we explore new domains, let’s focus quantum applications on high-impact, ethically sound areas like healthcare and climate innovation, where the outcomes are more likely to be a net good.

These choices will determine whether new technologies uplift society or exacerbate its divides.

Conclusion: Building a Responsible Future

Innovation and safety don’t have to be at odds. In fact, when approached thoughtfully, they can reinforce one another. As product leaders, we have the power to reshape the narrative: safety and ethics aren’t trade-offs—they’re opportunities. By embedding ethical considerations into the DNA of next-generation technologies, we create a path where safety becomes a key differentiator.

If we can find ways to market ethics and safety as integral features, these values can become competitive advantages. In high-stakes industries like AI and quantum computing, customers—whether consumers, enterprises, or governments—will flock to solutions they can trust. This shift would give responsible innovators a powerful edge, rewarding “good actors” and marginalizing those who prioritize reckless speed over long-term value.

The stakes are high, but so is the opportunity. The future of technology will be shaped not just by what we build, but by how we build it—and the standards we hold ourselves to. By aligning innovation with ethics, we can create a market where progress and responsibility go hand in hand, ensuring a safer, smarter future for everyone.