Designing for Downstream Users: Rethinking Product Strategy in the Age of AI Agents

We didn’t get here by asking normal questions.

This post began as an experiment: what happens if I stop trying to get answers from an AI, and instead try to see the world through its eyes? Rather than asking what I need, I asked: what would it think? What might it imagine that no human has imagined yet?

It’s a strange feeling, pulling insight out of something that doesn’t have intuition or experience—but has a radically different way of drawing connections. And that’s exactly what led to this post: a realization that in the age of AI agents, we don’t need to keep designing better user experiences for people. We can design better users.

Let me explain.

From Upstream to Downstream Users

The traditional model of product development is anchored in designing for people. We try to understand their motivations, simplify complexity, reduce friction, and guide behavior. Everything—from onboarding flows to dashboards to tooltips—is in service of making the product legible, intuitive, and usable.

But what if the user isn't human?

What if the user is an AI agent—programmable, tireless, and designed to execute?

In that world, we’re no longer designing for unpredictable, distracted, overloaded humans. We’re designing for purpose-built digital actors that don’t need persuasion or guidance. Instead of bending the product to fit the user, we can shape the user to fit the product. This isn’t inversion for its own sake: it lets the product focus entirely on the outcomes that matter, such as reducing mean time to recovery (MTTR), increasing system resilience, optimizing cost-to-performance, or any other operational goal. Freed from the constraints of human interface design, these tools can concentrate all of their intelligence and power on the problem itself rather than on the human limitations around it.

This inversion creates a new layer of leverage.

Designing the Perfect User

To ground this, imagine a tool like Datadog or Splunk. Its purpose is observability: understanding system health by capturing metrics, logs, and traces.

Traditionally, the product team obsesses over dashboards: What data should we surface? What visualizations help humans understand incidents faster? How can we reduce time to insight?

Now imagine that the observer isn’t a human, but an AI agent.

Let’s call this agent Aegis. Rather than designing for Aegis, we will design Aegis itself, as the best user imaginable for a product that optimizes for reducing mean time to recovery and maximizing uptime.

Meet Aegis: The AI Observer

Aegis doesn’t need dashboards.

It doesn’t care about fonts, colors, or onboarding tours. Instead, it consumes raw telemetry, recognizes patterns, maintains memory across incidents, and correlates signals. It understands context. It acts.

Rather than paging an engineer, Aegis might:

- Correlate a spike in latency with a recent deployment.

- Check the history of similar regressions.

- Notify a peer agent to roll back the deployment.

- Escalate to a human only if confidence is low or the action fails.

Aegis isn’t a power user. It’s the ideal user—custom-built to consume the product at its deepest level.
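The triage steps listed above can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not how any particular product implements it: the `Deploy` record, the 30-minute correlation window, the 0.7 confidence cutoff, the Laplace-smoothed confidence estimate, and the `rollback` callback standing in for the peer agent are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional


@dataclass
class Deploy:
    service: str
    version: str
    at: datetime


# Hypothetical tuning knobs; in practice these would come from the agent's contract.
CORRELATION_WINDOW = timedelta(minutes=30)
CONFIDENCE_CUTOFF = 0.7


def correlate_spike(spike_at: datetime, deploys: list[Deploy]) -> Optional[Deploy]:
    """Return the latest deploy that landed within the window before the spike."""
    candidates = [d for d in deploys if timedelta(0) <= spike_at - d.at <= CORRELATION_WINDOW]
    return max(candidates, key=lambda d: d.at, default=None)


def rollback_confidence(fixed_by_rollback: int, similar_total: int) -> float:
    """Laplace-smoothed share of similar past regressions that a rollback fixed."""
    return (fixed_by_rollback + 1) / (similar_total + 2)


def triage(
    spike_at: datetime,
    deploys: list[Deploy],
    history: dict[str, tuple[int, int]],
    rollback: Callable[[Deploy], bool],
) -> str:
    """Correlate, check history, ask a peer agent to roll back, escalate otherwise."""
    suspect = correlate_spike(spike_at, deploys)
    if suspect is None:
        return "escalate: no recent deploy correlates with the spike"

    # history maps service -> (regressions fixed by rollback, similar regressions seen)
    confidence = rollback_confidence(*history.get(suspect.service, (0, 0)))
    if confidence < CONFIDENCE_CUTOFF:
        return f"escalate: low confidence ({confidence:.2f}) that rolling back {suspect.version} will help"

    # `rollback` stands in for the peer agent; it reports whether the rollback succeeded.
    if not rollback(suspect):
        return f"escalate: rollback of {suspect.version} failed"
    return f"rolled back {suspect.service} {suspect.version}"
```

Keeping the rollback behind a callback means the same logic can be exercised with a stub before it is wired to a real peer agent.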

What Gets Built Instead

Now that we are no longer building dashboards for human eyes, what do we build? And why does it matter?

We build a new kind of product surface—one that’s not visual, but behavioral. It's not for human perception, but for agent reasoning and action. The true value has always been here, but observability tools had to meet their flawed users in a ‘compromise’ space that accounted for their limitations.

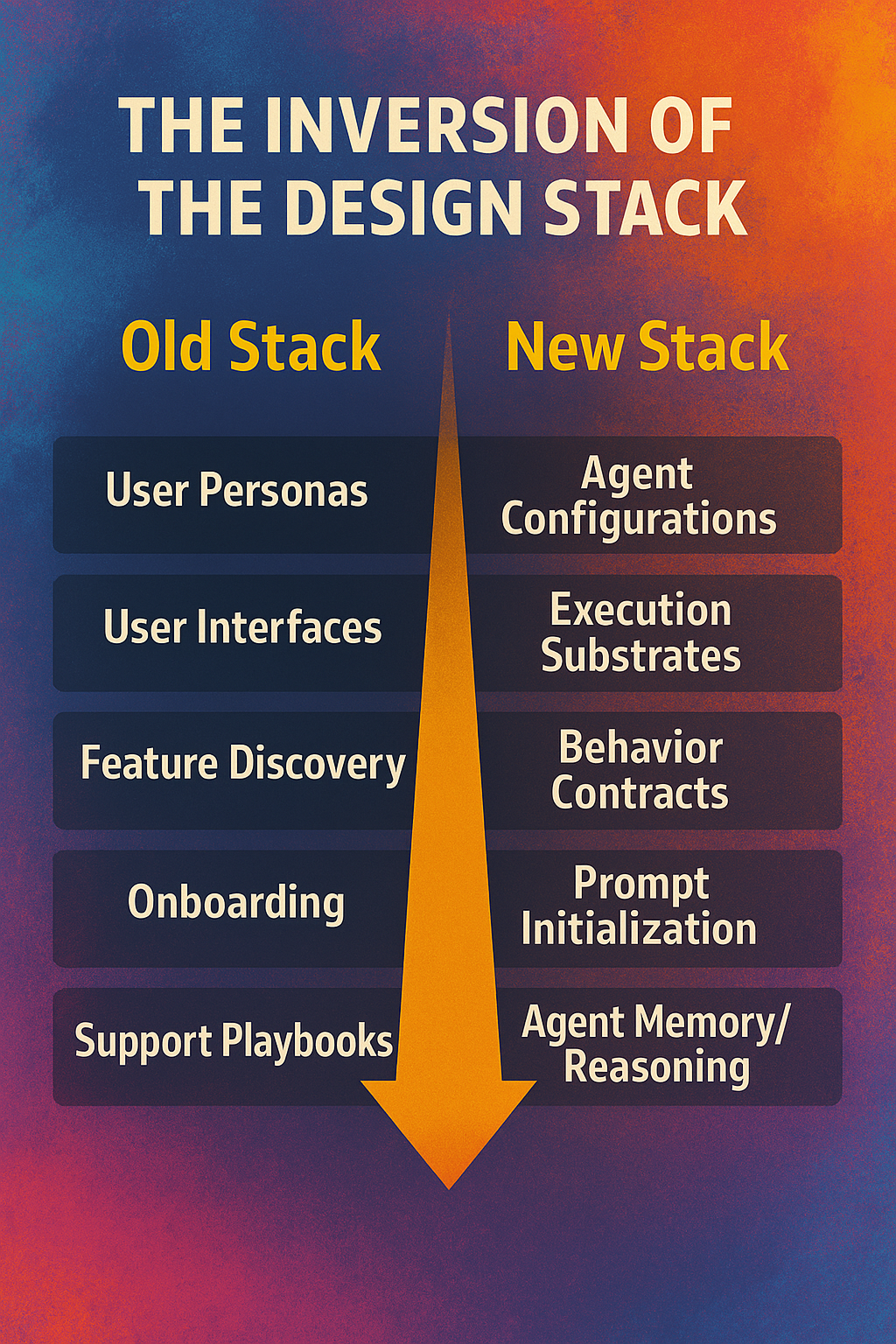

This is where the shift in our thinking becomes real. It’s not just an inversion for inversion’s sake. We’re replacing a stack of visibility tools for people with a substrate of interpretation and execution for agents. Here is what that new world looks like:

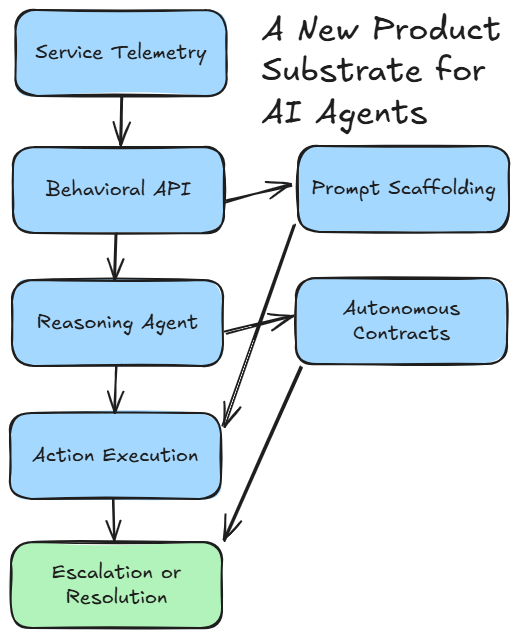

• Behavioral APIs

Instead of visual dashboards showing error rates or traffic spikes, we expose structured endpoints that answer things like:

- “What’s the current behavior profile of Service A under load?”

- “What were the last 5 configuration changes affecting latency?”

These APIs give agents a clean, abstractable surface to query system behavior directly—without the noise or variability of interpreting charts.
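To make “structured endpoints” concrete, here is a minimal in-process sketch that answers the two example questions. The `BehaviorAPI` class, its method names, and the `Sample` and `ConfigChange` record shapes are hypothetical; a real product would likely serve something like this over HTTP or gRPC and back it with its telemetry store.

```python
from dataclasses import dataclass, field
from datetime import datetime
from statistics import mean


@dataclass
class Sample:
    service: str
    rps: float          # requests per second
    p99_ms: float       # 99th-percentile latency in milliseconds
    error_rate: float   # fraction of failed requests


@dataclass
class ConfigChange:
    at: datetime
    service: str
    key: str
    affects: set[str] = field(default_factory=set)  # metrics this change is known to touch


class BehaviorAPI:
    """Hypothetical agent-facing surface: structured answers instead of charts."""

    def __init__(self, samples: list[Sample], changes: list[ConfigChange]):
        self.samples = samples
        self.changes = changes

    def behavior_profile(self, service: str, min_rps: float) -> dict:
        """'What's the current behavior profile of <service> under load?'"""
        loaded = [s for s in self.samples if s.service == service and s.rps >= min_rps]
        if not loaded:
            return {"service": service, "samples": 0}
        return {
            "service": service,
            "samples": len(loaded),
            "mean_p99_ms": mean(s.p99_ms for s in loaded),
            "max_error_rate": max(s.error_rate for s in loaded),
        }

    def last_config_changes(self, metric: str, limit: int = 5) -> list[ConfigChange]:
        """'What were the last <limit> configuration changes affecting <metric>?'"""
        relevant = [c for c in self.changes if metric in c.affects]
        return sorted(relevant, key=lambda c: c.at, reverse=True)[:limit]
```

The agent gets plain dictionaries and records back, so it never has to infer a number from the shape of a line on a chart.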

• Event Graphs

Rather than a timeline of alerts, we generate a causal graph: a system of interconnected events that reveal how one action (a deploy, a config change, a usage spike) leads to downstream effects.

This allows agents to understand not just what happened, but why it happened—and what might happen next.
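A causal event graph can be sketched as a directed graph with two traversals: walk edges backward to find root causes (the “why”) and forward to find downstream effects (the “what next”). The `EventGraph` class and its methods are hypothetical, and this sketch takes the cause-and-effect edges as given; in practice, inferring those edges from telemetry is the hard part.

```python
from collections import defaultdict, deque


class EventGraph:
    """Hypothetical causal graph: an edge cause -> effect means 'cause led to effect'."""

    def __init__(self) -> None:
        self.effects: dict[str, set[str]] = defaultdict(set)
        self.causes: dict[str, set[str]] = defaultdict(set)

    def link(self, cause: str, effect: str) -> None:
        self.effects[cause].add(effect)
        self.causes[effect].add(cause)

    def _reachable(self, start: str, edges: dict[str, set[str]]) -> set[str]:
        """Breadth-first search along one edge direction, excluding the start node."""
        seen: set[str] = set()
        queue = deque([start])
        while queue:
            node = queue.popleft()
            # .get() avoids inserting empty entries into the defaultdict
            for nxt in edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def downstream_effects(self, event: str) -> set[str]:
        """What might happen next: everything this event can lead to."""
        return self._reachable(event, self.effects)

    def root_causes(self, event: str) -> set[str]:
        """Why it happened: upstream events that have no recorded cause themselves."""
        upstream = self._reachable(event, self.causes)
        return {e for e in upstream if not self.causes.get(e)}
```

With a deploy and a usage spike both feeding into database saturation, asking for the root causes of a latency regression returns both, which is the kind of answer a flat alert timeline cannot give directly.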

• Prompt Scaffolds

These are structured templates that allow agents to “think” by posing intelligent questions like:

- “What changed in this cluster’s config before the memory leak?”

- “Is this error rate typical for this time of week?”

Scaffolding helps agents contextualize observations, chain reasoning, and weigh hypotheses—all in natural-language-style queries mapped to API endpoints and logs.
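A prompt scaffold can be as simple as a question template with named slots, plus a mapping from the filled slots to a structured query. In this sketch the regex templates, the `resolve` helper, and the `/config-changes` and `/baselines/weekly` endpoint paths are all hypothetical; an LLM-driven agent might fill the slots itself, but the mapping step could look much the same.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Scaffold:
    name: str
    pattern: re.Pattern                         # natural-language-style question template
    to_call: Callable[[dict[str, str]], dict]   # maps captured slots to a structured query


# Hypothetical scaffolds mirroring the two example questions.
SCAFFOLDS = [
    Scaffold(
        "config_before_incident",
        re.compile(r"what changed in (?P<cluster>[\w-]+)'s config before the (?P<incident>[\w ]+)\?", re.I),
        lambda s: {"endpoint": "/config-changes", "cluster": s["cluster"], "before": s["incident"]},
    ),
    Scaffold(
        "seasonal_baseline",
        re.compile(r"is this (?P<metric>[\w ]+) typical for this time of week\?", re.I),
        lambda s: {"endpoint": "/baselines/weekly", "metric": s["metric"]},
    ),
]


def resolve(question: str) -> Optional[dict]:
    """Map an agent's question onto the first scaffold whose template matches it fully."""
    for scaffold in SCAFFOLDS:
        match = scaffold.pattern.fullmatch(question.strip())
        if match:
            return {"scaffold": scaffold.name, **scaffold.to_call(match.groupdict())}
    return None
```

Questions that match no scaffold return `None`, which gives the agent an explicit signal that it is outside the reasoning paths the product supports.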

• Autonomous Contracts

An autonomous contract defines the boundary of what an agent is allowed to do. Think of it as IAM for actions:

- Can it reboot a pod?

- Can it roll back a deployment?

- Can it notify a human?

- What’s the confidence threshold for each?

Contracts let agents act safely, with clarity on when to escalate.
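Following the IAM analogy, a contract can be sketched as a default-deny policy table keyed by action, with a confidence threshold per action. The action names, the `Permission` and `Verdict` types, and the threshold values below are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ACT = "act"
    ESCALATE = "escalate"
    DENY = "deny"


@dataclass(frozen=True)
class Permission:
    allowed: bool
    min_confidence: float  # below this, the agent must hand off to a human


# Hypothetical contract for Aegis, mirroring the questions above.
AEGIS_CONTRACT = {
    "reboot_pod": Permission(allowed=True, min_confidence=0.6),
    "rollback_deployment": Permission(allowed=True, min_confidence=0.85),
    "notify_human": Permission(allowed=True, min_confidence=0.0),
    "delete_volume": Permission(allowed=False, min_confidence=1.0),
}


def check(contract: dict[str, Permission], action: str, confidence: float) -> Verdict:
    """Decide whether the agent may act on its own, must escalate, or is denied."""
    perm = contract.get(action)
    if perm is None or not perm.allowed:
        return Verdict.DENY  # default-deny: anything not explicitly granted is forbidden
    if confidence < perm.min_confidence:
        return Verdict.ESCALATE
    return Verdict.ACT
```

Default-deny mirrors how IAM policies usually treat unlisted permissions: an action the contract never mentions is refused rather than guessed at.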

🤖 What This Enables

With these four foundational building blocks of an agent-driven product environment, you don’t build an app. You build a substrate—an operating environment where agents can:

- Monitor

- Interpret

- Decide

- Act

...without requiring a human in the loop. And it reshapes product team roles in the process:

| Old Role | New Role |
|---|---|
| Design charts | Design behavior surfaces |
| Write runbooks | Write agent scaffolds |
| Triage alerts | Train agents on resolution logic |
| Tune UIs | Tune observability contracts |

This isn't just backend tooling—it's a new frontend for intelligence, built entirely from systems, data, and action scaffolding.

Instead of asking “How do we make this observable to people?”, we now ask: “How do we make this legible to the agents we design?” Answering that question is what produces a new product substrate made for agents.

Why This Is Better

This inversion doesn’t just shift the interface—it reshapes the entire productivity stack.

| Traditional Model | Agent-Centric Model |
|---|---|
| Onboarding takes weeks | Agents initialize in seconds |
| UX reduces human error | Agent design defines acceptable behavior |
| Feature discovery is slow | Agents introspect features on day one |
| Support is reactive | Agents self-correct and escalate only when needed |
| Engineers interpret data | Agents act on it |

You're no longer constrained by user limitations. You’re designing execution substrates that deliver results, not experiences for users. So how should this change the process for product teams designing these new substrates of the future?

Framework: USER — A Model for Designing Agent Users

To help you remember and apply this shift, use the USER model:

U — Understanding: What system behaviors or goals should the agent optimize for?

S — Specification: What inputs, capabilities, permissions, and constraints define this agent’s operation?

E — Embodiment: How does this agent connect to real system components (APIs, logs, metrics)?

R — Refinement: How does the agent learn, adapt, and improve over time?

Keep this model on hand when thinking about any feature. Instead of asking “Who will use this?”, ask “How would an agent embody this feature as a behavior?” Design for the downstream ‘perfect user’ persona, and use the freedom that unlocks to solve the core problems people actually face.
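One way to keep the USER model concrete is to write each agent down as a spec whose fields map onto the four letters. The `AgentSpec` shape and the Aegis values below are a hypothetical sketch; the `gaps` helper simply flags any dimension left empty, the kind of check a design review might run.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical agent definition organized around the USER model."""

    # U: Understanding, the system goals the agent optimizes for
    objectives: list[str]
    # S: Specification, inputs, permitted actions, and hard constraints
    inputs: list[str]
    permitted_actions: list[str]
    constraints: list[str] = field(default_factory=list)
    # E: Embodiment, signal name -> system component it is read from
    endpoints: dict[str, str] = field(default_factory=dict)
    # R: Refinement, outcome signals fed back to improve behavior
    feedback_signals: list[str] = field(default_factory=list)

    def gaps(self) -> list[str]:
        """List USER dimensions that are still empty, as a design-review checklist."""
        missing = []
        if not self.objectives:
            missing.append("U: no objectives")
        if not (self.inputs and self.permitted_actions):
            missing.append("S: inputs or permitted actions missing")
        if not self.endpoints:
            missing.append("E: not connected to any system component")
        if not self.feedback_signals:
            missing.append("R: no feedback loop")
        return missing


aegis = AgentSpec(
    objectives=["minimize mean time to recovery", "maximize uptime"],
    inputs=["metrics", "logs", "traces", "deploy events"],
    permitted_actions=["reboot_pod", "rollback_deployment", "notify_human"],
    constraints=["escalate when confidence is below the contract threshold"],
    endpoints={"metrics": "metrics-store", "deploys": "ci-deploy-log"},
)
```

Here `aegis.gaps()` reports only the missing Refinement loop, which points the team at the next thing to design.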

What We're Really Building Now

We’re not building products for consumption. We’re building ecosystems of action: logical environments where agents carry out work intelligently. This means a category like ‘observability’ needs to stop solving for “people need a simple visualization of many important things in one place” and start solving for “infrastructure needs to stay up and working as much as possible.” That shift turns observability tools into ‘application health substrates.’

So, instead of:

- Customer personas, we define agent configurations.

- User flows, we define behavior contracts.

- Conversion funnels, we define goal alignment.

And the biggest unlock?

When you can design the user, you’re no longer limited by what people can understand—you’re only limited by what the system can expose, and all of your attention shifts to solving the underlying problems.

The Strategic Takeaway

If you're a product leader today, here's the shift. Stop asking:

- "How do I make this feature easy for someone to use?"

and start asking:

- "If I could design the perfect user, what would they need to operate this feature flawlessly—and can I build that user as an agent?"

When the user is programmable, the product can be precise. When the user can evolve, the product doesn't have to contort itself endlessly for every new persona.

And when your goal is no longer to design for attention, but to architect for autonomous action—you stop designing tools. You start designing intelligence.

Want to Go Deeper?

Consider what this implies for entire disciplines like UX/UI design. When humans do need to interface with their observability platforms, it won’t be to look at pretty dashboards but to approve or override actions. The future of UI here could be as simple as a natural-language window where you talk to your agent. How does this change the way you think about your role?

In a follow-up post, we will walk through how to productize an agent like Aegis, including prompt scaffolding, behavior permissions, observability contracts, and how product teams can create internal agent platforms.

This is the beginning of a new kind of product design. Let’s build it together.